Predicting building energy consumption with tensorflow

This is my first post, so I should probably introduce myself briefly. I am currently studying at Loughborough University, UK, under the London-Loughborough Centre for Doctoral Training in Energy Demand. Before that I worked in different industries, mostly related to energy. If you are interested, see my profile on LinkedIn or Twitter. Skip the next paragraphs if you just want to get to the technical coding part.

One of the things that has struck me during these past years is the widening gap between computer science and the other engineering disciplines that could apply it. The simple reason seems to be that computer science has progressed so rapidly that engineers haven’t been able to keep up. We do not really seem to know how to use the opportunities properly, while computer science engineers do not know what to use their skills for. And we end up with this situation where our brightest minds and cutting-edge technologies are used to maximize the impact of internet ads… But let’s not go there now.

What I want to do is to bring computational techniques a bit closer to “real life” and see how to realize their value, and what could be a better application than energy use with all its complexities? So, more or less as a hobby, I have been developing my hands-on skills in utilising different digital technologies, and I wish to share what I have been up to and hopefully exchange ideas with like-minded people.

First of all, I am a mechanical engineer by training without an extensive background in coding, so I apologise for all the messiness and errors I will be making. As you will notice, the approach is to get stuff to work instead of focusing on the nitty-gritty. I hope that this approach provides some value, especially for those who are (like me) not so into the “under-the-hood” things. Applications first!

Let’s get started. I wanted to see whether I could use a Python package called tensorflow to create a simple trainable model that predicts the energy consumption of a building from outdoor temperatures. I basically followed the tensorflow tutorial structure but tweaked the code to better suit the energy domain. So really nothing revolutionary or advanced, but definitely a start. A big thanks also to Arthur Juliani for his tutorials; they helped me a lot.

The full code and the files are available on GitHub. My next plan is to make the code more modifiable and versatile. I also might try to create a learning controller by using a Raspberry Pi 3 along with tensorflow. I want to see how easy it would be to create an IoT device embedded with machine learning capabilities for the energy-specific domain. Feel free to contact me if you have any input, critique, ideas or comments. I would be happy to discuss further!

Below is the main programme, which calls the functions in pred_func.py at various stages. The workflow is pretty intuitive: first the data is read and turned into inputs that tensorflow can use. The out-of-the-box estimators provided by tensorflow are then used to train two models, a linear regression model and a deep neural network with two hidden layers. After training, both models are evaluated. Finally, the outputs of the evaluations are printed and plotted.

The main programme.

    # -*- coding: utf-8 -*-
    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt
    from pred_func import *  # import the helper functions from pred_func.py


    def main():
        ## Data format - define headers etc. ##
        header_in = ['Time', 'Power', 'AirTemp', 'RH', 'Pressure', 'Total', 'Diffuse',
                     'Total', 'Diffuse', 'Temp', 'Speed',
                     'Dir', 'Rain', 'Voltage', 'Solar Total', 'Solar Diffuse',
                     'Total','Diffuse', 'Rain', 'Dir.Avg2', 'Dir.Std2', 'WindClass2.0', 'WindClass20',
                     'WindClass20', 'WindClass20', 'WindClass20', 'WindClass20', 'WindClass245', 'WindClass245',
                     'WindClass245', 'WindClass245', 'WindClass245', 'WindClass245', 'WindClass290', 'WindClass290',
                     'WindClass290', 'WindClass290', 'WindClass290', 'WindClass290', 'WindClass2135', 'WindClass2135',
                     'WindClass2135', 'WindClass2135', 'WindClass2135', 'WindClass2135', 'WindClass2180', 'WindClass2180',
                     'WindClass2180', 'WindClass2180', 'WindClass2180', 'WindClass2180', 'WindClass2225',
                     'WindClass2225', 'WindClass2225', 'WindClass2225', 'WindClass2225', 'WindClass2225',
                     'WindClass2270', 'WindClass2270', 'WindClass2270', 'WindClass2270', 'WindClass2270',
                     'WindClass2270', 'WindClass2315', 'WindClass2315', 'WindClass2315', 'WindClass2315',
                     'WindClass2315', 'WindClass2315']
        header_out = ['Time', 'Pulse']
        fn_in = 'weatherdata.csv'
        fn_out = 'gasdata.csv'
        col_in = 'AirTemp'
        col_out = 'Pulse'
        split = 360

        # Parameters - try changing these and see what happens
        learning_rate = 0.1
        batch_size = 5

        # Determine if linear or dnn models are trained: 0 = no training, 1 = training
        # Note: training data is shuffled and model data updated every time training is conducted
        train_lin = 1
        train_dnn = 1

        # Run the defined functions to get the data in tensor format
        ds = read_data(fn_in, fn_out, header_in, header_out, col_in, col_out)  # create data-set
        (train_x, train_y), (test_x, test_y) = train_test_data(ds, col_in, col_out, split)  # create test and train data
        train_feats, train_labels = train_tf(train_x, train_y, batch_size)  # construct the tensors for training
        test_feats, test_labels = evaluate_tf(test_x, test_y, batch_size)

        feature_columns = [
            tf.feature_column.numeric_column(key='AirTemp')
        ]
        print('---------------Feature columns-----------------')
        print(feature_columns)
        print('%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%')

        # Build a linear regression model. You can test around with these.
        predictor_lin = tf.estimator.LinearRegressor(
            config=tf.estimator.RunConfig(model_dir='./linear_model/', save_summary_steps=100),
            feature_columns=feature_columns)

        # Build a DNN with 2 hidden layers of 3 units each. Test around with the number
        # of nodes and see what happens. Is it possible to find an optimum?
        # Also change the regularization strengths and see what happens.
        predictor_dnn = tf.estimator.DNNRegressor(
            config=tf.estimator.RunConfig(model_dir='./dnn_model/', save_summary_steps=100),
            feature_columns=feature_columns,
            hidden_units=[3, 3],
            optimizer=tf.train.ProximalAdagradOptimizer(
                learning_rate=learning_rate,
                l1_regularization_strength=1.0,
                l2_regularization_strength=0.1
            ))
        # Note: the model data is pushed to folders linear_model and dnn_model.

        # Train the models
        if train_lin == 1:
            print('%%% Training linear model %%%%')
            predictor_lin.train(input_fn=lambda: train_tf(train_x, train_y, batch_size))
        else:
            print('%%% Linear Model not trained %%%%')

        if train_dnn == 1:
            print('%%% Training DNN %%%%')
            predictor_dnn.train(input_fn=lambda: train_tf(train_x, train_y, batch_size))
        else:
            print('%%% DNN not trained %%%%')

        # Evaluate the models - print the outcomes
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------Linear model evaluation---------')
        eval_result_lin = predictor_lin.evaluate(input_fn=lambda: evaluate_tf(test_x, test_y, batch_size))
        print(eval_result_lin)
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------DNN model evaluation---------')
        eval_result_dnn = predictor_dnn.evaluate(input_fn=lambda: evaluate_tf(test_x, test_y, batch_size))
        print(eval_result_dnn)
        print('--------%%%%%%%%%%%%%%%%%---------')

        # The predictions
        # Make some predictions either by using some random values or using the evaluation data
        #predict_x = {'AirTemp': [10, 0, -5, 20, 25, -20],
        #             }
        predict_x = test_x
        #predict_y = {'Pulse': [0, 0, 0, 0, 0, 0]}
        predictions_lin = predictor_lin.predict(input_fn=lambda: evaluate_tf(predict_x, batch_size=batch_size))
        predictions_dnn = predictor_dnn.predict(input_fn=lambda: evaluate_tf(predict_x, batch_size=batch_size))

        # Collect the predictions from the generators into flat arrays
        pred_arr_lin = []
        pred_arr_dnn = []
        for pred_dict_lin in predictions_lin:
            pred_arr_lin = np.append(pred_arr_lin, pred_dict_lin['predictions'])
        for pred_dict_dnn in predictions_dnn:
            pred_arr_dnn = np.append(pred_arr_dnn, pred_dict_dnn['predictions'])

        # Plot and print predictions
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------Prediction input---------')
        print(predict_x['AirTemp'])
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------DNN model predictions---------')
        print(pred_arr_dnn)
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('--------Linear model predictions---------')
        print(pred_arr_lin)
        print('--------%%%%%%%%%%%%%%%%%---------')
        print('%%%%%%%%%----Plot Predictions----%%%%%%')
        plt.figure(figsize=(11.69, 8.27))
        plot_lin = plt.plot(predict_x['AirTemp'], pred_arr_lin, 'x', label="linear")
        plot_dnn = plt.plot(predict_x['AirTemp'], pred_arr_dnn, 'x', label="dnn")
        plot_meas = plt.plot(predict_x['AirTemp'], test_y, 'x', label="measured")
        plt.legend()
        plt.xlabel("Temperature [C]")
        plt.ylabel("Gas consumption [kWh]")
        plt.title("Temperature and gas consumption")
        plt.savefig("predictions")
        print('--------%%%%%%%%%%%%%%%%%---------')
        return []


    if __name__ == '__main__':
        main()

The file pred_func.py defines the functions needed in the main programme. The read_data function reads the data from the CSV files into a single data set. The train_test_data function then creates the training and testing sets from that data by randomizing it and splitting it.
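As a rough illustration of the randomize-then-split step, here is a minimal sketch in plain Python. It is not the code from pred_func.py: the function name `shuffle_and_split`, the made-up temperature and gas values, and the reading of `split` as "number of training rows" are all assumptions for the example.

```python
import random


def shuffle_and_split(rows, split, seed=None):
    """Shuffle rows and return (train, test).

    `split` is assumed to be the number of rows kept for training.
    """
    shuffled = list(rows)  # copy, so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)
    return shuffled[:split], shuffled[split:]


# Hypothetical (air temperature, gas pulse) pairs, not the real data set
rows = [(float(t), 100.0 - 3.0 * t) for t in range(-5, 25)]
train, test = shuffle_and_split(rows, split=20, seed=42)
print(len(train), len(test))
```

Fixing the seed makes the split repeatable, which helps when comparing two models on the same test set.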

The functions train_tf and evaluate_tf are more interesting: they define the input pipelines used for training and evaluation. In tensorflow, “features” are the inputs to our model, i.e. air temperature in this case. “Labels” are the outputs, here gas consumption. In training, the data is shuffled, batched and used to map the inputs to the outputs. Evaluation works similarly to training; when predicting, only the features are input and the model is used to predict the labels. For now the basic tensorflow methods are used to keep this straightforward.
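To make "shuffled and batched" concrete, here is a small plain-Python sketch of what such an input function does conceptually. It does not use tensorflow and is not the actual train_tf; the generator name `batches` and the sample values are made up for the illustration.

```python
import random


def batches(features, labels, batch_size, shuffle=True, seed=None):
    """Yield (feature_dict, label_list) batches, keeping each input paired with its label."""
    order = list(range(len(labels)))
    if shuffle:
        random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        batch_x = {key: [values[i] for i in idx] for key, values in features.items()}
        batch_y = [labels[i] for i in idx]
        yield batch_x, batch_y


# Hypothetical data: feature column 'AirTemp' and matching gas readings
features = {'AirTemp': [10.0, 0.0, -5.0, 20.0, 25.0, -20.0, 15.0]}
labels = [70.0, 100.0, 115.0, 40.0, 25.0, 160.0, 55.0]

for batch_x, batch_y in batches(features, labels, batch_size=5, seed=0):
    print(batch_x['AirTemp'], batch_y)
```

The features are kept as a dictionary keyed by column name because that is how the 'AirTemp' feature column in the main programme looks up its values.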

A cool feature of tensorflow is tensorboard, which can be used to visualize the models created in tensorflow. Just modify the following code to point to your directory with the python files, run it and open the page https://your_hostname:6006 in your browser to check it out. It provides interesting visualizations of the structure and training of the models.
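As a sketch of how tensorboard could be started, the snippet below builds the command line in Python. It assumes the `tensorboard` executable is on your path and uses the `./dnn_model/` directory from the main programme; the helper name `tensorboard_command` is made up for this example.

```python
import shlex


def tensorboard_command(logdir, port=6006):
    """Build the tensorboard command line for a given model directory."""
    return ['tensorboard', '--logdir', logdir, '--port', str(port)]


cmd = tensorboard_command('./dnn_model/')
print(' '.join(shlex.quote(part) for part in cmd))
```

You can paste the printed command into a terminal, or pass `cmd` to `subprocess.run` to launch it from Python.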

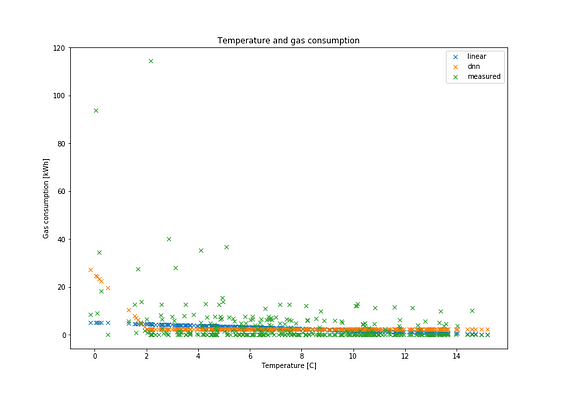

So, how did the models do? The DNN model was run with a learning rate of 0.3, the batch size was five for both models, and regularizations were set to zero since I wanted to demonstrate the difference to traditional linear regression. Interestingly, the DNN model predicts a significant step change in gas consumption when temperatures are low, and can thus reveal such features to a data-driven modeller. If the underlying data were more interesting we might see more features in our model, but since it was pretty straightforward we only see this one major difference to the linear model.
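Why can a network bend its prediction at low temperatures when a linear model cannot? The toy sketch below shows the idea with a single hidden ReLU unit. The weights are hand-picked and hypothetical, not the trained values from the post, and one unit only produces a kink; several units together can approximate something closer to a step.

```python
def relu(x):
    """Rectified linear unit: passes positive values, blocks negative ones."""
    return max(0.0, x)


def linear_model(temp, weight=-3.0, bias=100.0):
    """A straight line: the same slope at every temperature."""
    return weight * temp + bias


def tiny_network(temp):
    """The same line plus one hidden ReLU unit that is active only below 0 C."""
    hidden = relu(-1.0 * temp + 0.0)
    return linear_model(temp) + 5.0 * hidden


for temp in (-10.0, -5.0, 0.0, 5.0, 10.0):
    print(temp, linear_model(temp), tiny_network(temp))
```

Above zero degrees the two models agree; below zero the hidden unit switches on and the network predicts extra consumption that the straight line cannot represent.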

However, as is apparent from the figure, not much data existed for those very low temperatures, and since regularization was set small, the outliers can sway the model quite a lot. The models are only as good as the data used to train them. Thus the results should always be interpreted with some skepticism, especially when data can be extremely noisy or hard to acquire, as is typically the case with buildings.
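To see how much a single point in a sparsely sampled region can sway a fit, here is a small sketch using ordinary least squares in plain Python. The numbers are synthetic and chosen only for illustration; they are not the measured gas data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic, noise-free data on the line gas = 100 - 3 * temp
temps = [0.0, 5.0, 10.0, 15.0, 20.0]
gas = [100.0 - 3.0 * t for t in temps]
slope_clean, intercept_clean = fit_line(temps, gas)

# Add one cold-weather outlier: the line would give 130 at -10 C, we record 250
slope_outlier, intercept_outlier = fit_line(temps + [-10.0], gas + [250.0])

print('slope without outlier:', slope_clean)
print('slope with outlier:   ', slope_outlier)
```

Because the outlier sits alone at the cold end of the range, nothing nearby counterbalances it, so it pulls the whole line towards itself.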

We have built a very simple gas consumption predictor using tensorflow, so what? The point was to show that applications of AI and machine learning are not as far away as some might think. I believe these applications will also break through into the energy sector, where the quantity of gathered data is increasing rapidly. This applies especially to the demand side as it becomes an active component of the system.

This development is already being driven forward by regulation, which is making aggregation of flexible demand, real-time trading of energy and deeper integration of small-scale renewables increasingly common. I hope that these developments will be used for the common good of us and the environment by creating a healthy energy system characterized by words like efficient, democratic and reliable. But equally, machine learning will probably have a role in any future scenario, including the more ominous ones.

From a practitioner to practitioners,

-Eramismus

This post was originally published in Medium

I am a practical Finn with interests spanning energy, digitalisation and society. I am currently working towards a PhD in England. Among other things I try to explore the layered nature of sustainability through philosophy and technology.