Towards Flexineering: Plans for Pilot Experiments

The next heating season is approaching and my project is moving to the next level: experiments in test homes! I wanted to briefly outline the things I am aiming to get done over the summer with my pilot as I build skills as a Flexineer (this will become a thing). If you want to avoid the technicalities, just skip straight to the end. What follows next is some basic background on model-predictive control, ARX-models and system identification.

Model-Predictive Control

What is model-predictive control, you ask? The concept of model-predictive control (MPC) is simple: models are used to deliver optimal control decisions. In the context of buildings, a model of the building is combined with information on factors like weather and indoor temperature requirements to find the best course of action in a given situation through optimisation. In addition to the model, an important element is the objective function, a mathematical representation of what the controller aims to do.
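As a rough illustration of what such an objective function could look like, here is one common cost-minimising form. The symbols are my own assumptions for this sketch and not a fixed formulation: u_k is the heating power at time step k, p_k the energy price, T_k the indoor temperature, w_k the disturbances such as weather, f the building model and N the length of the prediction horizon.

$$
\min_{u_0,\dots,u_{N-1}} \; \sum_{k=0}^{N-1} p_k \, u_k
\quad \text{subject to} \quad
T_{k+1} = f(T_k, u_k, w_k), \qquad
T^{\min}_k \le T_k \le T^{\max}_k
$$

The first constraint is the model predicting how the temperature evolves, and the second one encodes the comfort requirements.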

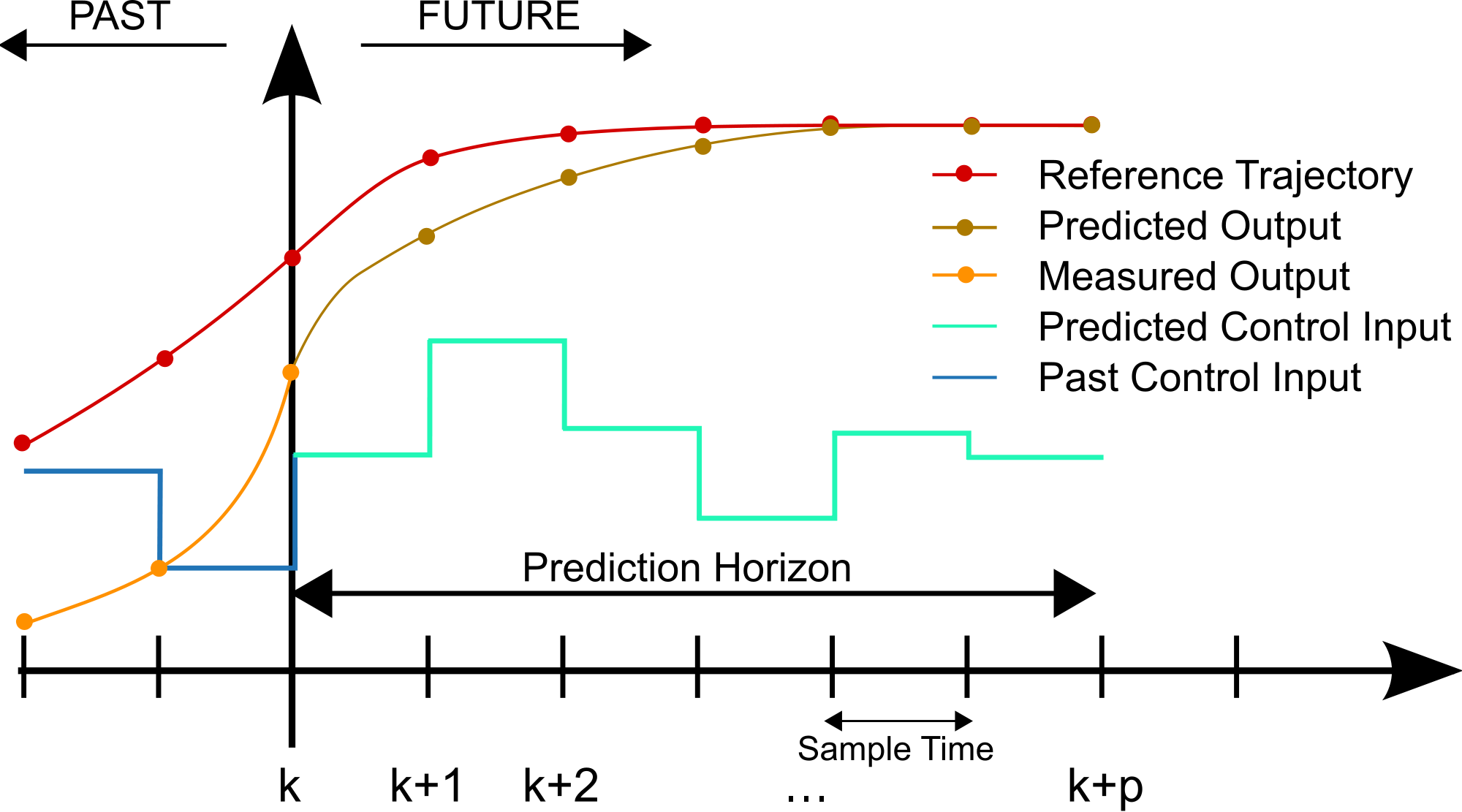

The figure above demonstrates the basic principle. MPC produces optimal control decisions over a set period of time based on data it gets from the system. There are plenty of examples of MPC and its use in a bunch of industries as well as in buildings. But so far its use for flexibility has been quite limited, and experimental examples in particular are still lacking. My humble opinion is that MPC will be the way to go if we wish to realise a vision of smart grids while maintaining a reasonable level of control and comfort. In practice MPC is already here, as shown by the advent of smart thermostats for example.

So what is so great about MPC? Currently, controls in most buildings are relatively simple and rule-based, which means they follow sets of simple rules, for example “be ON 5 - 11 pm and OFF otherwise”. Or with a thermostat something like “maintain temperature at 21 degrees 9-11 am and 5-11 pm, otherwise be OFF”.
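To show how little there is to a rule like this, here is a minimal sketch of the thermostat rule in Python. The function and constant names are made up for illustration, and I assume the heater is simply switched on whenever the temperature is below the setpoint inside a heating window.

```python
from datetime import time

# Schedule and setpoint from the example rule in the text (names are hypothetical).
HEATING_WINDOWS = [(time(9, 0), time(11, 0)), (time(17, 0), time(23, 0))]
SETPOINT_C = 21.0


def rule_based_heater_on(now: time, indoor_temp_c: float) -> bool:
    """Return True if the heater should be ON under the fixed rule."""
    in_window = any(start <= now < end for start, end in HEATING_WINDOWS)
    # Outside the windows the heater is always OFF, whatever the temperature.
    return in_window and indoor_temp_c < SETPOINT_C
```

Note that nothing in the rule knows about prices, weather or what happens in the next hour.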

The issue with rule-based control is that it is rigid: the rules are meant to simply supply a well-defined level of service, heat when it is needed. But other goals exist that systems could also aim for, such as minimising energy consumption or cost, or reducing disruptions and malfunctions. What speaks for rules, however, is naturally the ease of their design and implementation, since they require significantly less complex computations and are hence easier to troubleshoot.

When heating buildings, and especially when using them to deliver demand response, being able to predict circumstances gives an advantage compared to rules. Example: it is known that heat is needed in one hour and energy is cheap right now, so it makes sense to heat the home now, use the capability of the building to retain heat, and avoid a high spike in energy use when it is expensive. With MPC and a suitably defined objective function this can actually be realised, hence it has pretty good potential to enable buildings to become more flexible.
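The pre-heating example can be sketched in a few lines of Python. This is a toy under loud assumptions: the first-order thermal model and its coefficients are made up, the heater is only ON or OFF, and the "optimiser" is a brute-force search over all plans, which only works for very short horizons. A real MPC would use a proper solver, apply only the first decision of the plan and then re-optimise at the next time step.

```python
from itertools import product


def simulate(t0, plan, a=0.9, b=3.0, t_out=5.0):
    """Predict indoor temperatures for an ON/OFF plan with a toy first-order model."""
    temps, t = [], t0
    for u in plan:
        # Heat leaks towards the outdoor temperature; the heater adds b degrees per step.
        t = a * t + (1 - a) * t_out + b * u
        temps.append(t)
    return temps


def best_plan(t0, prices, t_min):
    """Return (cost, plan) for the cheapest plan meeting the comfort limits, or None.

    t_min[k] is the minimum temperature required at the end of step k
    (None means no requirement for that step).
    """
    best = None
    for plan in product((0, 1), repeat=len(prices)):
        temps = simulate(t0, plan)
        if any(req is not None and t < req for t, req in zip(temps, t_min)):
            continue  # the plan violates comfort, skip it
        cost = sum(p * u for p, u in zip(prices, plan))
        if best is None or cost < best[0]:
            best = (cost, plan)
    return best


# Energy is cheap now and expensive later; 19 degrees is needed at the end of step 2.
cost, plan = best_plan(19.0, prices=[1.0, 4.0, 4.0], t_min=[None, 19.0, None])
```

With these made-up numbers the cheapest feasible plan heats during the first, cheap step and then coasts on the stored heat, which is exactly the behaviour a fixed rule cannot produce.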

ARX-models

A key component of MPC is the M, the model. To perform optimum control and predictions, a model of the system is needed, and in this case the system is the building and its heating systems. A big issue in this modelling task is scalability: an engineer can spend hours finding a really good model, but for controls this should be done very quickly with minimal human intervention.

Hence, I am going with data-driven ARX-models, which are rooted in time series analysis and statistics. ARX comes from the word monster Auto-Regressive model with eXogenous inputs. Auto-regressive means the model uses previous (lagged) values of the variable it predicts as inputs. Exogenous inputs are simply any inputs other than those lagged terms.

ARX-models are defined purely with data: only the terms of the model are chosen, and their coefficients are then determined by fitting the model to training data. So, say we wish to construct a model to predict indoor temperatures, the terms could for example be indoor temperatures from the last hour (auto-regressive terms), and outdoor temperature and heat input by the heating system (exogenous terms).
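Written out, an ARX-model for the indoor temperature example could look like the following. The notation is my own assumption for illustration: T_in is the indoor temperature, T_out the outdoor temperature, Q the heat input, e the prediction error, and n_a, n_b, n_c the number of lagged terms used for each signal.

$$
T_{in}(k) = \sum_{i=1}^{n_a} a_i \, T_{in}(k-i)
          + \sum_{j=1}^{n_b} b_j \, T_{out}(k-j)
          + \sum_{j=1}^{n_c} c_j \, Q(k-j)
          + e(k)
$$

The first sum holds the auto-regressive terms and the other two the exogenous terms. The coefficients a_i, b_j and c_j are what gets fitted to the training data.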

A range of other modelling techniques also exists; people have used for example neural networks and physics-based RC models (for examples check this out). What speaks for ARX-models, however, is their relatively simple nature, the existence of good techniques for fitting them, and their linearity. The last bit is important for optimisation.

System Identification

System identification is a tricky part of MPC. During system identification both the structure and the parameters of the model need to be defined. With ARX-models, by defining the structure I mean choosing the lagged terms and exogenous inputs to use. The parameters that then need to be defined are the coefficients for each chosen term, so that the result is a well-performing model.
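To make the parameter part concrete, here is a minimal sketch of fitting the coefficients with ordinary least squares in plain Python. The assumptions are mine: the structure is fixed to a first-order model with one lag of each signal, the data is synthetic and noise-free, and the "true" coefficients are made up. In practice a numerical library would do the solving.

```python
import math


def solve_linear(A, y):
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    M = [row[:] + [rhs] for row, rhs in zip(A, y)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_arx(t_in, t_out, heat):
    """Least-squares fit of T_in[k] = a*T_in[k-1] + b*T_out[k-1] + c*heat[k-1]."""
    rows = [[t_in[k - 1], t_out[k - 1], heat[k - 1]] for k in range(1, len(t_in))]
    targets = t_in[1:]
    # Normal equations: (X^T X) theta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(3)]
    return solve_linear(xtx, xty)


# Synthetic training data generated from made-up coefficients.
TRUE_A, TRUE_B, TRUE_C = 0.9, 0.1, 2.0
n = 40
t_out = [5.0 + 3.0 * math.sin(0.3 * k) for k in range(n)]
heat = [1.0 if k % 5 < 2 else 0.0 for k in range(n)]
t_in = [18.0]
for k in range(1, n):
    t_in.append(TRUE_A * t_in[k - 1] + TRUE_B * t_out[k - 1] + TRUE_C * heat[k - 1])

a, b, c = fit_arx(t_in, t_out, heat)  # should recover the made-up coefficients
```

Choosing the structure, meaning which lags and inputs go into `rows`, is the part this sketch hard-codes and the part that takes the real thinking.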

This is a balancing act between accuracy and simplicity. Adding too many inputs typically causes over-fitting, which means that the model would match a training data-set really well but would not be general, i.e. it would be poor at predicting outside of the training data-set. The simplicity of the model is important to maintain. This is especially the case in control applications, since we wish to have simple models to make optimisation and computation as effortless as possible.

In system identification the overall training process and how uncertainty or changing conditions are accounted for become very important. For example, should we use a complex model that is able to predict behaviour comprehensively or rather shift between models during different situations? What about when dynamics rapidly change in a way that could not be foreseen? A model could suddenly lose its prediction power if the underlying fundamentals change.

To achieve a well-performing MPC-controller, I am now considering using two-phased identification. In the first phase a set of historic data is used to determine the structure and initial parameters for the ARX-models. In the second phase, during control operation, I continually update the model parameters based on the success of the predictions.
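One standard technique for the second phase is recursive least squares (RLS) with a forgetting factor. I have not settled on a method yet, so treat the following as a sketch of one option rather than what the pilot will actually run. The function names, the forgetting factor of 0.98 and the initial covariance scale are all assumptions for illustration.

```python
def initial_state(n_params, scale=1000.0):
    """Start with zero coefficients and a large covariance (little trust in them)."""
    theta = [0.0] * n_params
    P = [[scale if i == j else 0.0 for j in range(n_params)] for i in range(n_params)]
    return theta, P


def rls_update(theta, P, x, y, forgetting=0.98):
    """One recursive least-squares step; returns the updated (theta, P).

    x is the vector of model inputs (lagged and exogenous terms), y the
    measured value the model tried to predict.
    """
    n = len(theta)
    Px = [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]
    denom = forgetting + sum(x[i] * Px[i] for i in range(n))
    gain = [v / denom for v in Px]
    # The prediction error drives the correction of the coefficients.
    error = y - sum(t * xi for t, xi in zip(theta, x))
    new_theta = [t + g * error for t, g in zip(theta, gain)]
    # P is symmetric, so x^T P equals the transpose of Px.
    new_P = [[(P[i][j] - gain[i] * Px[j]) / forgetting for j in range(n)]
             for i in range(n)]
    return new_theta, new_P
```

In use, `rls_update` would be called once per time step with the latest measurement. A forgetting factor below 1 makes old data count less, which lets the coefficients follow slow changes in the building, at the cost of more sensitivity to noise.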

I won’t go into more detail here, but if you wish to find out more I recommend you read these:

A great book on MPC in buildings: M. Maasoumy and A. Sangiovanni-Vincentelli, “Smart Connected Buildings Design Automation: Foundations and Trends,” Found. Trends® Electron. Des. Autom., vol. 10, no. 1–2, pp. 1–143, 2016. Link

Good academic paper on system identification: P. Radecki and B. Hencey, “Online Model Estimation for Predictive Thermal Control of Buildings,” IEEE Trans. Control Syst. Technol., vol. 25, no. 4, pp. 1414–1422, 2017. Link

Plan for Pilot Experiments

Finally, the experimental work. First I need to calibrate the sensors and test all my computers before I deploy them in the test homes. This will mean two things: I need to set up both the data collection and the controls.

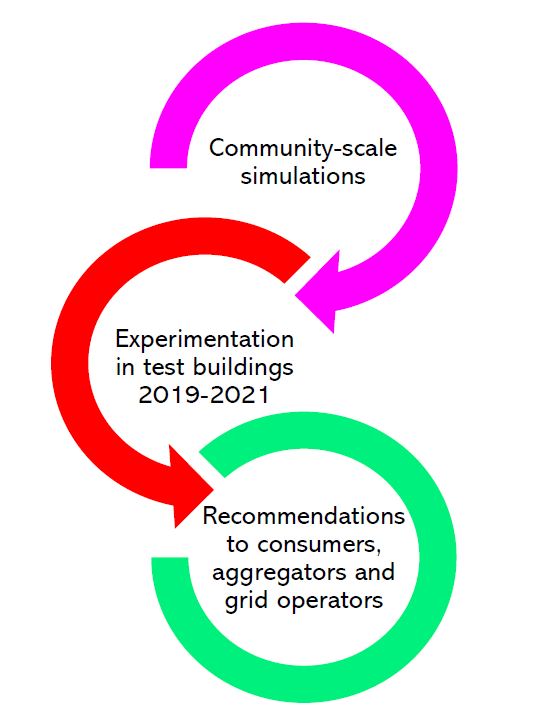

In the pilot study I will be looking into different system identification techniques and how they work in the building. I want to look into how the sensor set-up affects system identification and how often models should be recalibrated or updated to maintain good prediction and control performance. I will also investigate how system identification is affected by other factors, such as people’s behaviour, physical properties of the building and weather.

In the pilot experiments I am taking the approach of building a minimum viable product (MVP) for my research. I will use simple electrical fan heaters, which are relatively straightforward to control with plug switches, to represent the heating systems of a home. After that I will move on to controlling a real heat pump.

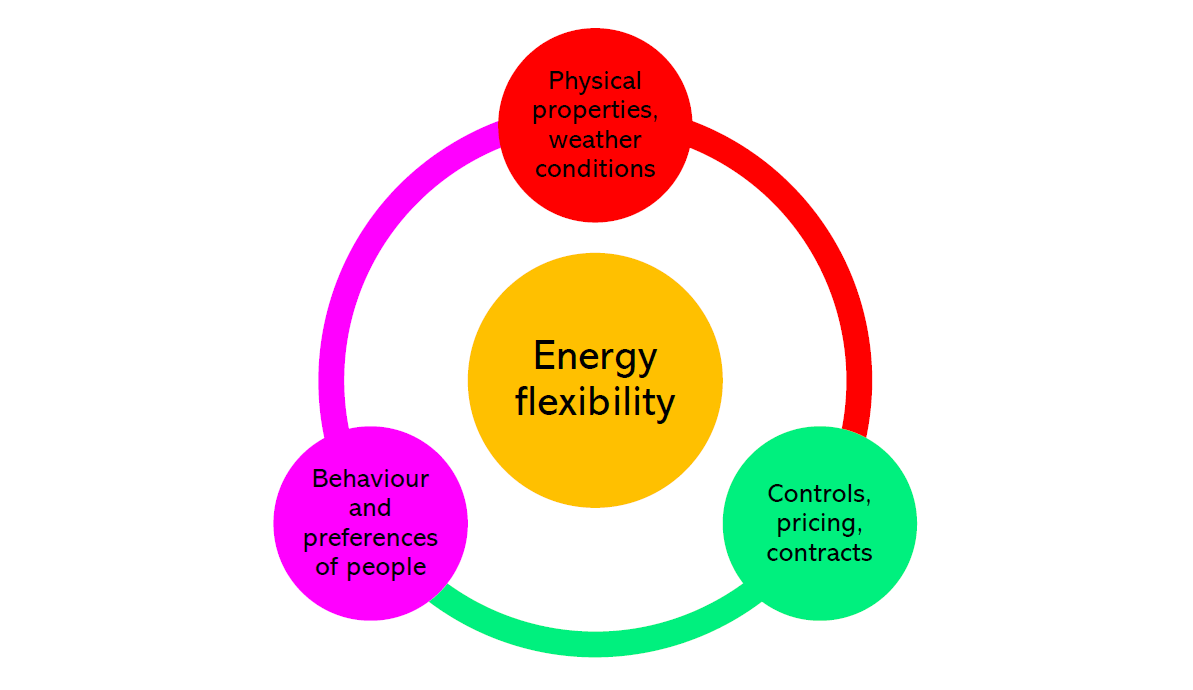

All this is building up towards a set of experiments over the next heating seasons. Through my main experiments I will start comprehensively looking into flexibility factors, the factors that define the flexibility potential of a given building. For example, there are the physical aspects, such as the capability of the building to retain heat; the people, how much heat they need and when they need it; and the cyber, like the control algorithm, computational set-up and data gathering implementations. However, working out how these factors interplay with each other will require some real data.

By defining factors of flexibility, I wish to build a foundation for actually assessing flexibility potential and make the whole concept more tangible, so that various parties can start benefitting from it.

But more about that later. I am also thinking of coming out at some point with a more technical post where I share some of the code and run through it in a digestible and approachable manner, so someone could pick it up for further development.

Until next time!

-Eramismus

References

MPC figure: M. Behrendt, “MPC scheme basic,” Wikimedia Commons, 2009. [Online]. Available here. [Accessed: 15-Aug-2018].

[1] James D. Hamilton, Time series analysis. Princeton University Press, 1994. Link

[2] M. Maasoumy and A. Sangiovanni-Vincentelli, “Smart Connected Buildings Design Automation: Foundations and Trends,” Found. Trends® Electron. Des. Autom., vol. 10, no. 1–2, pp. 1–143, 2016. Link

[3] P. Radecki and B. Hencey, “Online Model Estimation for Predictive Thermal Control of Buildings,” IEEE Trans. Control Syst. Technol., vol. 25, no. 4, pp. 1414–1422, 2017. Link

[4] R. S. El Geneidy and B. Howard, “Review of techniques to enable community-scale demand response strategy design,” uSim2018 - Urban Energy Simul., 2018. Link

I am a practical Finn with interests spanning energy, digitalisation and society. I am currently working towards a PhD in England. Among other things I try to explore the layered nature of sustainability through philosophy and technology.